AI has changed how we write code. Developers are now shipping faster, experimenting more, and relying on AI to handle parts of their workflow that used to take hours.

However, as speed increases, so does risk.

More code means more chances for vulnerabilities to slip through. And if testing still happens at the end of the process, it’s already too late. That’s where AI-powered SAST tools come in.

They help teams spot and fix issues early, keeping up with the same speed that AI brings to development.

In this article, we break down the 10 leading AI-powered SAST tools. We explore the core features of each tool and the distinct ways they use AI to enhance vulnerability discovery, prioritization, and remediation.

Best AI-Powered SAST Tools at a Glance

- Best overall / low noise & Auto-Fix:

- Best for strict data privacy (no external AI calls):

- Best for GitHub-native workflows:

- Best for fast IDE suggestions (quick fixes):

- Best for customizable rules & detection:

- Best for on-prem / bring-your-own-LLM:

- Best for enterprise governance & legacy stacks:

- Best “AI-first” scanning approach:

- Best validated auto-remediation in dashboard:

What is SAST?

Static Application Security Testing (SAST) is a methodology for analyzing an application's source code, bytecode, or binary to identify vulnerabilities and security flaws early in the software development lifecycle (SDLC). Because SAST inspects the code itself, before it ever runs, it is often the first line of defense against insecure code.

SAST vs. DAST

While SAST analyzes code from the inside out, DAST (Dynamic Application Security Testing) tests from the outside in.

Think of it this way:

- SAST tools examine your source code before an application runs — catching issues like insecure functions, hardcoded credentials, or logic flaws during development.

- DAST tools, on the other hand, run security tests while the application is live, probing it like an attacker would to find real-world exploitable weaknesses such as SQL injection, XSS, or authentication bypasses.

Both are essential, but they serve different purposes in the SDLC. To learn more, read SAST vs DAST: What you need to know.

The Advantages of Static Application Security Testing Tools

SAST tools are one of the most effective ways to find and fix vulnerabilities before your code ever runs. Here are their key advantages:

- Early Detection in the SDLC: SAST runs during coding or build time, enabling developers to fix vulnerabilities before they reach production. This “shift-left” approach reduces the overall difficulty, cost and time of remediation.

- Comprehensive Code Coverage: Because SAST examines the entire codebase, including dependencies and configuration files, it can identify flaws that dynamic testing might miss.

- Developer-Friendly Feedback: The best modern SAST tools integrate with IDEs, Git repositories, and CI/CD systems to provide in-line feedback, code suggestions, and auto-fix recommendations without slowing down development.

- Supports Compliance and Audit Readiness: SAST helps organizations meet requirements from frameworks like SOC 2, ISO 27001, GDPR, and OWASP ASVS by proving that secure coding practices are in place.

- Continuous Improvement Through Automation: AI-powered SAST tools can automatically learn from past vulnerabilities, reducing false positives and helping teams continuously strengthen their code over time.

In short, SAST empowers developers to build secure software by design, not as an afterthought.

What vulnerabilities does SAST find in your code?

SAST can find many different vulnerabilities, and which ones it finds depends on your coding practices, technology stack, and frameworks. Below are some of the most common vulnerabilities a SAST tool will typically uncover.

SQL Injection

Detects improper sanitization of user inputs that could lead to database compromise.

Example Python code vulnerable to SQL Injection:

```python
# Function to authenticate user (VULNERABLE: builds SQL from raw user input)
def authenticate_user(cursor, username, password):
    query = f"SELECT * FROM users WHERE username = '{username}' AND password = '{password}'"
    print(f"Executing query: {query}")  # For debugging purposes
    cursor.execute(query)
    return cursor.fetchone()
```

The above code is vulnerable because it builds the query with string interpolation (an f-string), inserting user input directly via '{username}' and '{password}'. This lets any malicious actor inject SQL through their input; for example, entering admin' -- as the username comments out the password check entirely.
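For contrast, here is a minimal sketch of the usual fix: a parameterized query. It assumes Python's built-in sqlite3 module and a throwaway in-memory users table created only for the demo; the helper name authenticate_user_safe is hypothetical, and placeholder syntax (`?` here) varies between database drivers.

```python
import sqlite3

def authenticate_user_safe(cursor, username, password):
    # Placeholders make the driver send values separately from the SQL text,
    # so input like "admin' --" is treated as data, never as SQL syntax.
    query = "SELECT * FROM users WHERE username = ? AND password = ?"
    cursor.execute(query, (username, password))
    return cursor.fetchone()

# Demo against an in-memory database (plaintext passwords only for brevity)
conn = sqlite3.connect(":memory:")
cur = conn.cursor()
cur.execute("CREATE TABLE users (username TEXT, password TEXT)")
cur.execute("INSERT INTO users VALUES ('admin', 's3cret')")

print(authenticate_user_safe(cur, "admin", "s3cret"))     # ('admin', 's3cret')
print(authenticate_user_safe(cur, "admin' --", "wrong"))  # None
```

The injection payload that bypasses the vulnerable version simply fails to match any username here.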

Cross-Site Scripting (XSS)

Identifies instances where user inputs are incorrectly validated or encoded, allowing injection of malicious scripts.

Example of client-side JavaScript code vulnerable to XSS:

```html
<script>
  const params = new URLSearchParams(window.location.search);
  const name = params.get('name');

  if (name) {
    // Directly inserting user input into HTML without sanitization
    document.getElementById('greeting').innerHTML = `Hello, ${name}!`;
  }
</script>
```

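The code above is vulnerable because it uses .innerHTML to insert user input directly into the page without sanitization. For example, a link ending in ?name=<img src=x onerror=alert(1)> would run the attacker's script in the victim's browser. Assigning the value to .textContent instead renders it as plain text.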
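When HTML is generated on the server instead, the same principle applies: encode user input before inserting it into markup. Below is a minimal Python sketch using the standard library's html.escape; the render_greeting helper is hypothetical. html.escape is suited to element content and quoted attribute values; other contexts, such as inline JavaScript or URLs, need different encoding.

```python
import html

def render_greeting(name: str) -> str:
    # html.escape converts &, < and > (and, by default, " and ') into HTML
    # entities, so the browser displays the input instead of parsing it as markup.
    return f'<p id="greeting">Hello, {html.escape(name)}!</p>'

print(render_greeting("<img src=x onerror=alert(1)>"))
# <p id="greeting">Hello, &lt;img src=x onerror=alert(1)&gt;!</p>
```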

Buffer Overflows

Highlights areas where improper handling of memory allocation could lead to data corruption or system crashes.

Example C code vulnerable to buffer overflow:

```c
#include <stdio.h>

void vulnerableFunction() {
    char buffer[10]; // Room for 9 characters plus the terminating null byte

    printf("Enter some text: ");
    gets(buffer); // Dangerous function: does not check input size

    printf("You entered: %s\n", buffer);
}

int main() {
    vulnerableFunction();
    return 0;
}
```

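The above code is vulnerable because it uses the C gets() function, which is dangerous: gets() doesn't know the size of the buffer it reads into, so any input longer than nine characters is written past the end of buffer, resulting in a buffer overflow. For this reason, gets() was removed from the C standard in C11; fgets(), which takes the buffer size as an argument, is the bounded alternative.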

Insecure Cryptographic Practices

Finds weak encryption algorithms, improper key management, or hardcoded keys.

Example of vulnerable Python cryptography code using obsolete MD5 hash function:

```python
import hashlib

def store_password(password):
    # Weak hashing algorithm (MD5 is broken and unsuitable for passwords)
    hashed_password = hashlib.md5(password.encode()).hexdigest()
    print(f"Storing hashed password: {hashed_password}")
    return hashed_password
```
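A safer approach uses a deliberately slow, salted key-derivation function. The sketch below uses PBKDF2-HMAC-SHA256 from Python's standard hashlib module; the 600,000-iteration count is an assumption based on OWASP's password-storage guidance, the function names are illustrative, and dedicated algorithms such as Argon2, bcrypt, or scrypt are common alternatives.

```python
import hashlib
import hmac
import os

# Assumed work factor; tune for your hardware and threat model.
ITERATIONS = 600_000

def hash_password(password: str) -> tuple[bytes, bytes]:
    # A random per-password salt defeats precomputed (rainbow table) attacks.
    salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)
    return salt, digest

def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    digest = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)
    # Constant-time comparison avoids leaking information through timing.
    return hmac.compare_digest(digest, expected)

salt, stored = hash_password("correct horse battery staple")
print(verify_password("correct horse battery staple", salt, stored))  # True
print(verify_password("wrong guess", salt, stored))                   # False
```

Store the salt alongside the digest; neither needs to be secret, but both are required to verify a login.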

Altogether, SAST tools provide valuable insights, enabling developers to fix issues before they become critical.

How Static Application Security Testing (SAST) Works

You’ve learned what SAST is and what kinds of vulnerabilities it finds. Now let's see how it works behind the scenes.

The SAST process typically follows these four core steps:

Step 1: Code Parsing and Modeling

The tool scans the application’s source code, bytecode, or binaries to create a structured representation (an abstract syntax tree or data flow graph). This helps it understand how the code is built, how data moves through it, and where security controls should exist.
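As a simplified illustration, Python's standard ast module can parse source code into an abstract syntax tree that a scanner then walks. The snippet below (with a hypothetical list_calls helper) records each function call and its line number; production SAST engines build much richer, language-specific models, including data flow graphs.

```python
import ast

SOURCE = '''
def greet(request):
    name = request.args.get("name")
    return "Hello, " + name.strip()
'''

def list_calls(source: str) -> list[tuple[int, str]]:
    """Parse source into an AST and return (line, callee) for every call node."""
    tree = ast.parse(source)
    calls = []
    for node in ast.walk(tree):
        if isinstance(node, ast.Call):
            # ast.unparse (Python 3.9+) turns the callee node back into text
            calls.append((node.lineno, ast.unparse(node.func)))
    return sorted(calls)

for line, callee in list_calls(SOURCE):
    print(line, callee)
```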

Step 2: Rule-Based Analysis

Next, the tool applies a set of security rules and patterns to identify risky code constructs.
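A toy version of this step matches call sites in a Python AST against a small rule table. The rule IDs and messages are made up for illustration. Purely syntactic matching like this misses aliased imports (for example, `from hashlib import md5`), which is one reason real engines resolve names and track data flow.

```python
import ast

# Hypothetical rule table: dotted callee name -> (rule ID, message)
RULES = {
    "eval": ("PY-EVAL", "eval() executes arbitrary code"),
    "hashlib.md5": ("PY-WEAK-HASH", "MD5 is unsuitable for passwords"),
    "pickle.loads": ("PY-PICKLE", "unpickling untrusted data can execute code"),
}

def scan(source: str) -> list[tuple[int, str, str]]:
    """Return (line, rule ID, message) for every call that matches a rule."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Call):
            callee = ast.unparse(node.func)  # e.g. "hashlib.md5"
            if callee in RULES:
                rule_id, message = RULES[callee]
                findings.append((node.lineno, rule_id, message))
    return sorted(findings)

SAMPLE = (
    "import hashlib\n"
    "digest = hashlib.md5(pw.encode()).hexdigest()\n"
    "result = eval(user_input)\n"
)
for finding in scan(SAMPLE):
    print(finding)
```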

Step 3: Vulnerability Correlation and Prioritization

Not every finding is critical. To reduce false positives and highlight what actually matters, SAST tools analyze context:

- where the vulnerability exists,

- how it could be exploited, and

- whether it affects sensitive data.
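To illustrate the idea, here is a minimal scoring sketch in which each of those contextual factors adjusts a finding's priority. The fields and weights are hypothetical; commercial tools use their own, often undisclosed, models.

```python
from dataclasses import dataclass

@dataclass
class Finding:
    rule_id: str
    base_severity: int            # 1 (low) to 4 (critical), set by the rule
    in_test_code: bool            # where: test fixtures are rarely exploitable
    reachable_from_input: bool    # how: does user-controlled data reach it?
    touches_sensitive_data: bool  # impact: e.g. credentials or PII

def priority(f: Finding) -> int:
    """Hypothetical weights: context raises or lowers the rule's base severity."""
    score = f.base_severity * 10
    if f.in_test_code:
        score -= 25
    if f.reachable_from_input:
        score += 20
    if f.touches_sensitive_data:
        score += 15
    return score

findings = [
    Finding("PY-WEAK-HASH", 3, in_test_code=False, reachable_from_input=False, touches_sensitive_data=True),
    Finding("PY-EVAL", 4, in_test_code=False, reachable_from_input=True, touches_sensitive_data=False),
    Finding("PY-EVAL", 4, in_test_code=True, reachable_from_input=False, touches_sensitive_data=False),
]

for f in sorted(findings, key=priority, reverse=True):
    print(priority(f), f.rule_id)
# 60 PY-EVAL, then 45 PY-WEAK-HASH, then 15 PY-EVAL
```

Because context is weighed alongside the rule's own severity, the same eval() rule can land at both the top and the bottom of the queue.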

Step 4: Reporting and Developer Feedback

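Finally, the results are surfaced where developers already work: in the IDE, as pull request comments, or in CI/CD pipeline output. Developers can act on them immediately, keeping security integrated into the daily workflow rather than treated as a separate phase.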
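To reach IDEs, pull requests, and dashboards, findings are often exchanged in a shared machine-readable format. One widely used option is SARIF (Static Analysis Results Interchange Format) 2.1.0, an OASIS standard that GitHub code scanning, among others, can ingest. The sketch below emits a minimal SARIF log from a hypothetical finding; real reports usually also carry rule metadata and other optional fields.

```python
import json

def to_sarif(tool_name: str, findings: list[tuple[str, int, str, str, str]]) -> dict:
    """Build a minimal SARIF 2.1.0 log from (path, line, rule_id, level, message) tuples."""
    results = []
    for path, line, rule_id, level, message in findings:
        results.append({
            "ruleId": rule_id,
            "level": level,  # one of "none", "note", "warning", "error"
            "message": {"text": message},
            "locations": [{
                "physicalLocation": {
                    "artifactLocation": {"uri": path},
                    "region": {"startLine": line},
                }
            }],
        })
    return {
        "version": "2.1.0",
        "runs": [{"tool": {"driver": {"name": tool_name}}, "results": results}],
    }

# Hypothetical tool name and finding, for illustration only
report = to_sarif("toy-sast", [("app/auth.py", 3, "PY-EVAL", "error", "eval() executes arbitrary code")])
print(json.dumps(report, indent=2))
```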

Today, AI is taking each of these steps to the next level.

How AI is Enhancing SAST Tools

Right now you can’t escape the AI buzz (and BullSh*t), and it can be difficult to know exactly how AI is being implemented in security tools.

Broadly, there are three trends in how AI is applied to SAST tools:

- AI to improve vulnerability detection: AI models trained on large datasets of known vulnerabilities improve the accuracy of identifying security issues while reducing false positives.

- AI to create automated prioritization: AI helps rank vulnerabilities based on severity, exploitability, and potential business impact, allowing developers to focus on critical issues first.

- AI to provide automated remediation: AI provides context-aware code fixes or suggestions, speeding up the remediation process and helping developers learn secure coding practices.

Next, we are going to compare some of the leaders in AI-powered SAST and explain the different ways these tools are implementing AI to enhance security.

Top 10 AI-Powered SAST Tools

Here are 10 industry leaders that are using AI in different ways to enhance the capabilities of traditional SAST (in alphabetical order):

TL;DR:

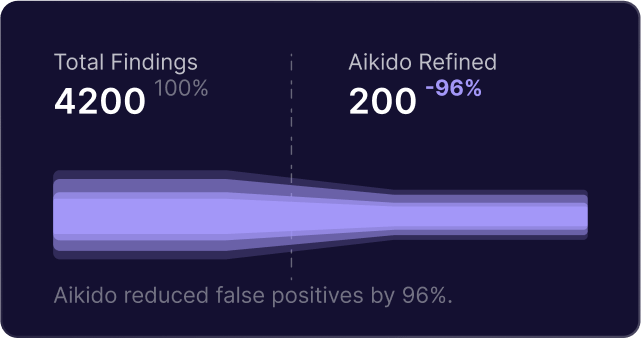

Aikido takes the #1 spot as a SAST solution that goes beyond code scanning. For small to medium enterprises, you can get everything you need to secure your IT estate in one suite. For enterprises, Aikido offers SAST alongside best-in-class security products, so you can pick which module you need and unlock the platform when you're ready.

It uses AI to automatically fix vulnerabilities and filters out the noise (few false positives), so dev teams only see real issues. Aikido is the no-nonsense choice for CISOs, CTOs and developers who want fast, smart code security.

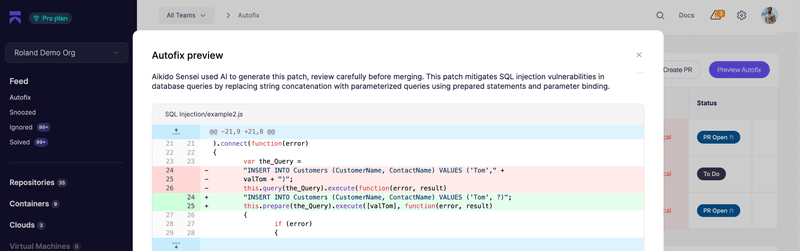

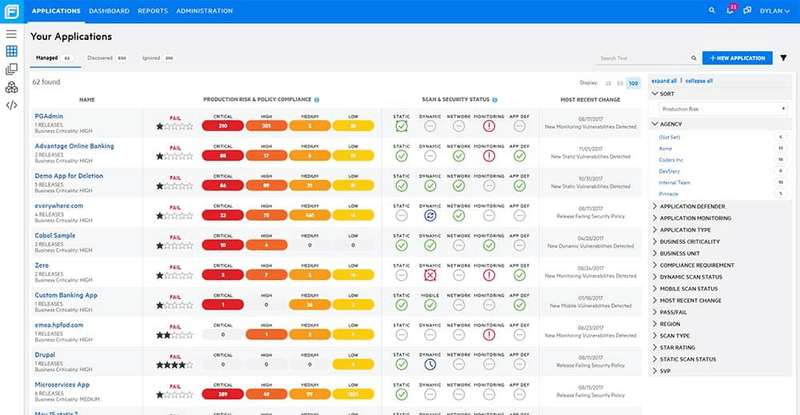

1. Aikido Security SAST | AI AutoFix

Core AI Capability | Auto Remediation (Dashboard + IDE)

Aikido Security uses AI to create code fixes for vulnerabilities discovered by its SAST scanner and can even generate automated pull requests to speed up the remediation process.

Unlike other tools, Aikido does not send your code to a third-party AI model, never stores or uses it to train AI, runs entirely on local servers, and has a unique method of ensuring your code does not leak through AI models.

Aikido first applies its own security rules to filter out false positives, and then uses a purpose-tuned LLM only to verify and refine suggestions. All analysis happens in a secure sandbox, so once a suggested remediation has passed validation, a pull request can be created automatically before the sandbox environment is destroyed.

Aikido’s AutoFix also provides confidence scores, calculated without learning from your code, so developers can make informed decisions.

Key features:

- Low false-positive / noise reduction: Aikido emphasizes high-confidence findings by filtering out non-security alerts and “cry-wolf” warnings, and uses an AI-based triage engine to reduce false positives (up to ~95% reduction).

- IDE & pull-request / CI/CD integration: Aikido SAST checks integrate directly into developer workflows, with inline feedback in IDEs and PR comments, and gating in CI/CD pipelines.

- AI-assisted auto-fix / remediation suggestions: For many vulnerabilities, Aikido AI AutoFix can generate fixes or patches automatically (or suggest them) to accelerate remediation.

- Context-aware severity scoring & custom rules: Issues are prioritized based on context (e.g. whether a repo is public or handles sensitive data), and users can define custom rules tailored to their codebase.

- Multi-file taint-tracking & broad language support: It performs cross-file analysis (tracking tainted input across modules), supports many major languages out of the box, and doesn’t require compilation.

- And more.

Best for: CISOs at large enterprises, startups, and scaleups who understand developers’ needs.

Pros:

- Built for developers, with a focus on reducing the cognitive load of security across the SDLC

- Utilizes AI for code fixes.

- Generates automated pull requests.

- Ensures code privacy by not sending it to third-party AI models.

- Unified platform covering multiple security layers (SAST, SCA, IaC, secrets, runtime, cloud) so you don’t need to cobble together many separate tools

- Easy setup and integration into existing developer workflows

- Transparent pricing and good value with no hidden costs

Cons:

None noted.

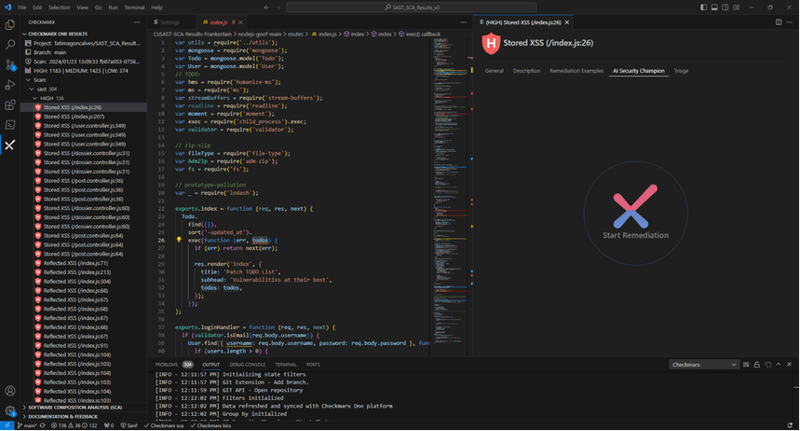

2. Checkmarx

Core AI Capability | Auto Remediation (IDE only)

Checkmarx SAST tools can provide AI-generated coding suggestions to developers within their IDE. The tool connects to ChatGPT, sends the developer's code to the OpenAI model, and retrieves the suggestions. This makes querying ChatGPT more convenient but adds no proprietary processing, which limits its capabilities for now.

WARNING: This use case sends your proprietary code to OpenAI and may not meet compliance standards.

Key features:

- Multi-Language Support: Capable of scanning a wide range of programming languages, including Java, JavaScript, and Python, with deep analytical coverage.

- Highly Scalable: Built to efficiently manage large and complex projects without compromising speed or accuracy.

- Seamless CI/CD Integration: Integrates smoothly into CI/CD pipelines, allowing teams to detect vulnerabilities early in the development cycle.

Best for: Organizations willing to accept (or manage) the risk of sending snippets of proprietary code to external AI services (like ChatGPT) for remediation suggestions

Pros:

- Provides AI-generated coding suggestions within IDEs by connecting to ChatGPT.

- Good ecosystem of integrations (IDE, CI/CD, SCM) facilitating adoption by developers.

- Real-time IDE scanning and ASCA (AI Secure Coding Assistant) provide immediate feedback (though limited) to catch issues early.

Cons:

- Sends proprietary code to OpenAI, which may not meet compliance standards.

- Complexity / learning curve can be high for new users or small teams lacking strong AppSec maturity

- Cost/licensing can be a barrier; it is often priced at the premium end, which might be hard for smaller orgs to justify

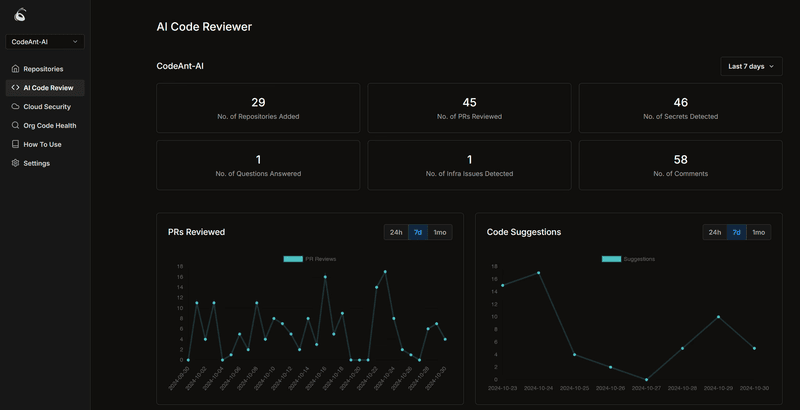

3. CodeAnt AI

Core AI Capability | Improved Detection (Dashboard)

CodeAnt is a code security and quality tool that relies entirely on AI to discover code vulnerabilities and suggest fixes. CodeAnt does not document how its AI models work, but it uses AI as its core detection engine, which can slow down detection, particularly in large enterprises.

Key features:

- AI-driven SAST / security scanning: Triggered on pull requests to detect vulnerabilities and bugs.

- Code health & quality analysis: Covers code smells, duplication, and complexity checks alongside security scans.

- Git platform integration: Works with GitHub, Bitbucket, GitLab, and Azure DevOps.

Best for: Teams that prioritize speed & automation in code review and security, and want to offload much of the manual review effort.

Pros:

- Highly automated & AI-centric, which can speed up detection, code reviews, and remediation work

- Unified toolset that includes security, code quality, secrets, SCA, and IaC in one platform

- Integration into development workflows (PRs, CI gating, dashboards) → less context switching

Cons:

- Limited integrations compared to other tools

- Lack of transparency about how the AI models work internally

- Performance may degrade / lag on very large repositories or enterprise-scale codebases

- Difficulty tuning or customizing rules beyond what the AI provides (less control than classical SAST in some respects)

- “Black box” nature could reduce developer trust or make debugging of false positives harder

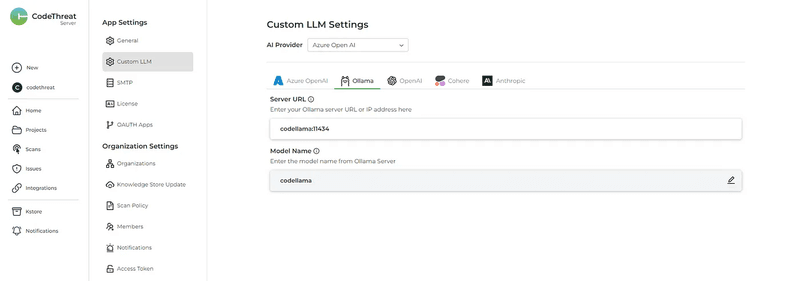

4. CodeThreat

Core AI Capability | Automated Prioritization (Dashboard)

CodeThreat offers on-premises static code analysis and provides AI-assisted remediation strategies. A core difference is that CodeThreat lets you integrate your own on-premises AI model into its tool. This has the advantage of not sending data to a third party, but it means the tool can currently only offer generically trained AI models, and you need to run a self-hosted LLM.

Key features:

- On-premises static code analysis: Runs scans locally without sending code to external cloud systems.

- AI-assisted remediation: Provides automated fix proposals and suggestions based on internal models.

- VS Code plugin support: Lets developers trigger scans and view fix suggestions directly in their IDE.

Best for: Organizations with strict data sovereignty / compliance requirements that cannot allow code to leave premises

Pros:

- Data control & privacy: Because you can run AI models internally, you avoid sending sensitive code to third parties

- Flexibility / customization: Ability to integrate your own model gives you control over inference behavior or tuning

- Local performance (potentially): For internal models, latency and throughput can be optimized without relying on API calls

Cons:

- If your on-prem model has lower capacity or lacks domain training, false positives / false negatives may be worse

- Limited to generically trained AI models.

- Requires running a self-hosted LLM on-premises.

5. OpenText™ Static Application Security Testing (SAST)

Core AI Capability | Improved Prioritization (Dashboard)

OpenText™ Static Application Security Testing (Fortify) scans source code for vulnerabilities and lets users adjust the thresholds at which alerts are raised, for example, based on the likelihood of exploitability. Fortify’s AI assistant reviews the thresholds previously assigned to vulnerabilities and makes intelligent predictions about what the thresholds for other vulnerabilities should be.

Note: Fortify Static Code Analyzer does not use AI to discover vulnerabilities or suggest fixes for them; instead, it uses AI to predict the administrative settings configured in its admin panels.

Key features:

- Multiple language support: Supports 33+ programming languages and frameworks, analyzing code (or bytecode) paths to detect vulnerabilities.

- IDE, build tool, and CI/CD integrations: Enables scans to run early in pull requests and builds.

- Flexible deployment: Supports on-premises, private cloud, hybrid, or hosted options

Best for: Teams that already have OpenText solutions integrated in their systems.

Pros:

- Strong, mature static analysis engine with broad language and framework support

- Extensive customization options (policies, thresholds, taint flags, rules) to tailor noise control and precision

- Centralized governance, audit tracking, dashboards, integration with ticketing & DevSecOps tools

- Strong reputation, enterprise market presence, proven track record

Cons:

- The AI component is limited. It does not generate or suggest remediation code, only helps with classification / threshold settings

- Setup, tuning, and configuration effort can be substantial (especially for large, diverse codebases)

- Complexity and licensing costs can be relatively high. Pricing is also not publicly available; you must contact the sales team for a quote.

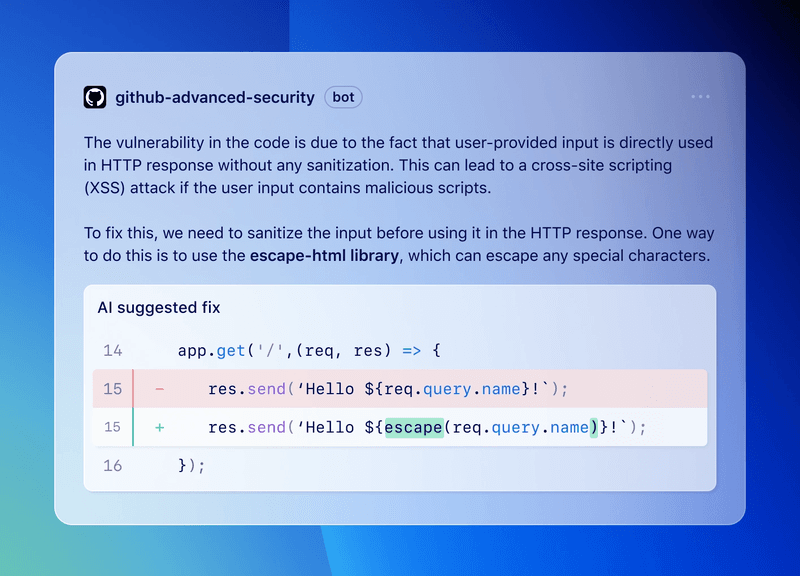

6. GitHub Advanced Security | CodeQL

Core AI Capability | Auto Remediation (IDE + Dashboard)

GitHub CodeQL is a static code scanner. Through Copilot Autofix, GitHub uses AI to turn its findings into intelligent auto-remediation in the form of code suggestions. Developers can accept or dismiss the changes via pull requests in GitHub Codespaces or on their own machine. It is part of GitHub Advanced Security.

Key features:

- Copilot Autofix / AI-powered suggestions: For supported alerts, AI generates code changes (with explanations) that can span multiple files and dependency changes.

- Support for multiple languages: For both scanning and autofix suggestions

- Configurable / opt-out behavior: You can disable autofix or tailor it via policies.

Best for: Development teams already using GitHub / GitHub Advanced Security and wanting seamless integration of security and remediation in their workflow.

Pros:

- You do not need a subscription to GitHub Copilot to use GitHub Copilot Autofix.

- Aligns security and development in one platform (GitHub), reducing the need for external SAST tools in many cases.

- Smooth developer experience: suggestions appear in the same PR view, minimizing context switching.

Cons:

- File size: If the affected code is in a very large file or repository, the context provided to the LLM may be truncated.

- Limited coverage: While Copilot Autofix supports a growing list of languages and CodeQL alerts, it doesn't cover every possible alert type or language.

- In complex codebases, suggestions may not account for all interdependencies, side effects, or architectural constraints

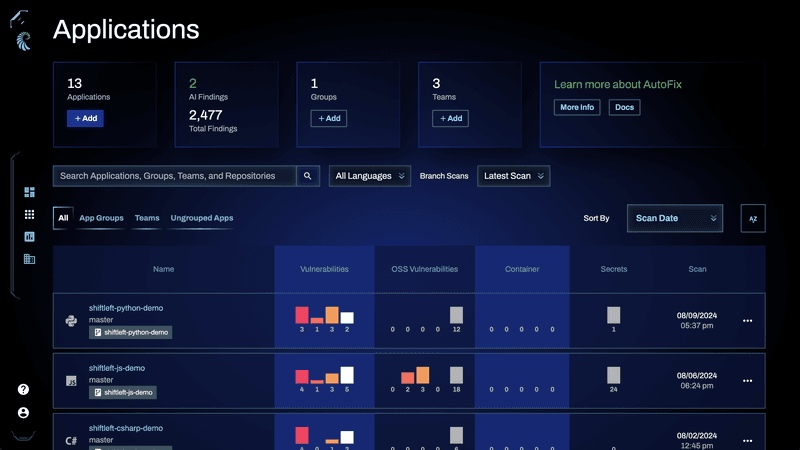

7. Qwiet AI | SAST Code

Core AI Capability | Auto Remediation (Dashboard)

Qwiet AI SAST is a rule-based static application security testing tool that leverages AI to auto-suggest remediation advice and code fixes for code vulnerabilities. Its core offering is a three-stage AI agent process that analyzes the issue, suggests a fix, and then validates the fix.
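The general analyze, suggest, validate pattern can be sketched in a few lines. This is a hypothetical illustration, not Qwiet AI's implementation: the suggest_fix stub stands in for an AI model, and validation here only checks that the patch still parses and that the original finding is gone. Production tools may also re-run the full scan and the project's tests before proposing a change.

```python
import ast
import re

def analyze(source: str) -> list[int]:
    """Analyze: line numbers of bare eval() calls (stand-in for a real rule engine)."""
    return [node.lineno for node in ast.walk(ast.parse(source))
            if isinstance(node, ast.Call)
            and isinstance(node.func, ast.Name) and node.func.id == "eval"]

def suggest_fix(source: str) -> str:
    """Suggest: hypothetical stub standing in for an AI model's proposed patch.
    ast.literal_eval only accepts Python literals, so it cannot run arbitrary code."""
    return re.sub(r"\beval\(", "ast.literal_eval(", source)

def validate_fix(original: str, candidate: str) -> bool:
    """Validate: the patch must still parse and must clear the original finding."""
    try:
        ast.parse(candidate)
    except SyntaxError:
        return False
    return bool(analyze(original)) and not analyze(candidate)

SAMPLE = "import ast\nconfig = eval(user_text)\n"
candidate = suggest_fix(SAMPLE)
if validate_fix(SAMPLE, candidate):
    print("Fix accepted:")
    print(candidate)
else:
    print("Fix rejected; finding left for manual review")
```

Keeping validation separate from suggestion means an unreliable suggester can only waste effort, not ship a broken or ineffective patch.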

Key features:

- Fast scanning at scale: claims to scan millions of lines in minutes, targeting performance improvements over legacy SAST tools.

- AI remediation suggestions: for many findings, Qwiet offers context-aware code suggestions (and validates them) to reduce manual fix effort.

- Unified scan coverage: includes detection for code, containers, secrets, dependencies (SCA) in the same platform.

Best for: Teams that prioritize automated remediation support.

Pros:

- Employs a three-stage AI agent process.

- Users value the thorough documentation and responsive support from Qwiet AI, facilitating seamless integration into CI/CD pipelines.

Cons:

- It’s a relatively new tool, so its maturity, ecosystem support, and long-term stability may be uncertain

- Users find the limited customization options frustrating, as custom policies can only be set via CLI.

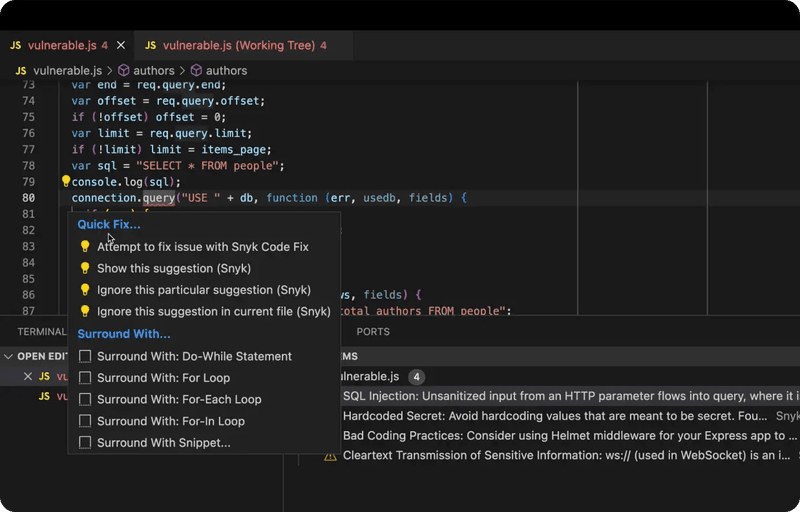

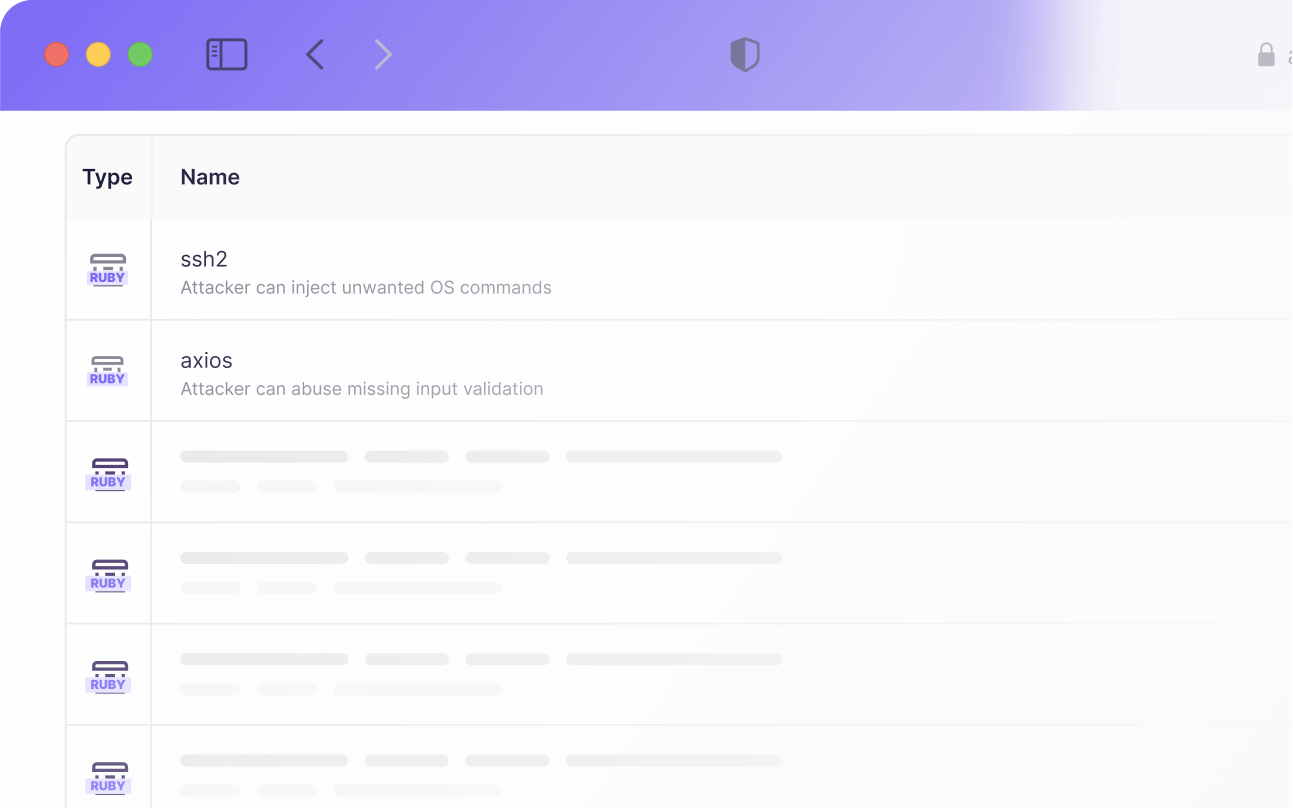

8. Snyk Code | DeepCode

Core AI Capability | Auto Remediation (IDE)

Snyk Code is a SAST tool that can provide code suggestions to developers within the IDE thanks to DeepCode AI (now Snyk Agent Fix), which Snyk acquired. DeepCode AI uses multiple AI models, but Snyk's proprietary rules limit transparency and customization (even though custom rules are supported). Snyk's commercial tier can be costly for organizations that need coverage across many pipelines and developer teams.

Key features:

- Real-time scanning in IDE / developer workflow: vulnerabilities are flagged as you code, integrated into IDEs, PRs, and CI/CD.

- Remediation suggestions: for many supported findings, Snyk can generate candidate fix snippets that developers can accept, edit, or reject.

- Custom rule writing / queries: users can create custom rules / queries using DeepCode logic (with autocompletion) to tailor scanning to their codebase.

Best for: Development teams already using Snyk / the Snyk platform (SCA, IaC, etc.) who want integrated code scanning

Pros:

- Provides code suggestions to developers within IDEs

- Utilizes multiple AI models trained on data curated by top security specialists.

Cons:

- Limited transparency and customization in its AI / remediation engine

- Cost scaling / licensing issues: many users report Snyk becomes expensive at scale (especially when covering multiple developer teams / pipelines)

- Snyk Agent Fix currently does not support inter-file fixes

- Limited support for major programming languages like Go, C#, C/C++, etc.

- Some reviews mention UI or tool performance concerns, occasional failures or slowness in core engine operations.

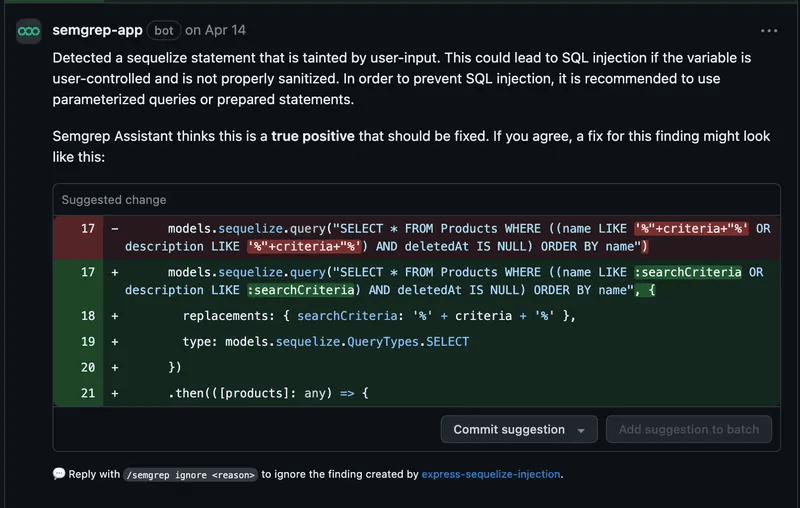

9. Semgrep Code

Core AI Capability | Improved Detection

Semgrep's AI assistant, aptly named Assistant, uses the context of the code surrounding a potential vulnerability to provide more accurate results and recommended code fixes. It can also create Semgrep rules from the prompts you provide, enhancing detection.

Key features:

- Context learning with “Memories”: Assistant can remember past triage decisions or organizational context so that similar future findings are triaged more intelligently.

- Component tagging & prioritization: Assistant can tag findings by context (e.g. auth, payments) to help prioritize higher-risk areas.

- Remediation guidance: For many true positive findings, Assistant offers step-by-step human language guidance + code snippets to fix or improve the vulnerable code.

Best for: Teams already in Semgrep ecosystem and want to enhance developer productivity and triage efficiency

Pros:

- Uses AI to provide accurate results and recommended code fixes.

- Can create rules based on prompts.

Cons:

- “Memories” complexity: Managing what the Assistant “remembers” (triage logic, trusted sources) can add cognitive overhead and risk of drift if misconfigured.

- By default, Semgrep Assistant uses OpenAI and AWS Bedrock with Semgrep's API keys. Concerns about privacy, compliance, or cost may arise (although Semgrep offers options and memory controls).

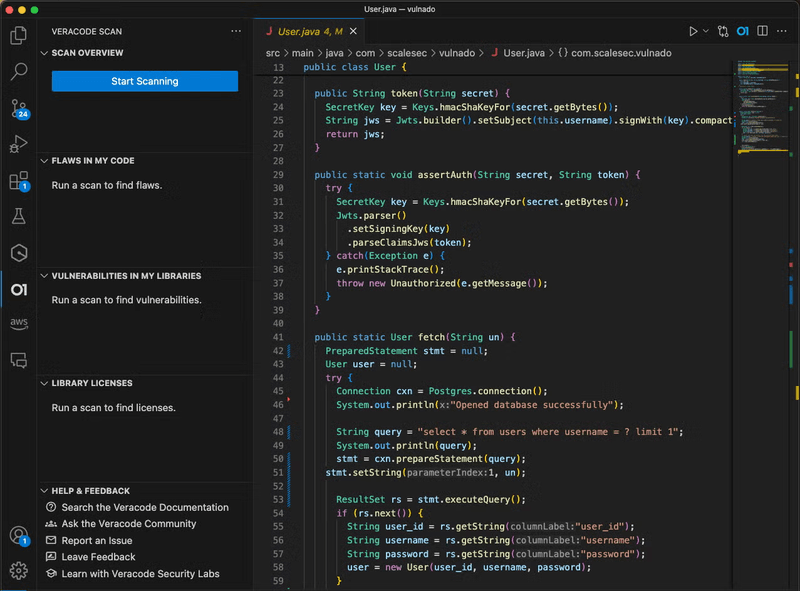

10. Veracode Fix

Core AI Capability | Auto Remediation

Veracode Fix uses AI to suggest changes for vulnerabilities in code when developers use the Veracode IDE extension or CLI tool. The main differentiator for Veracode Fix is that its custom-trained model is trained not on code in the wild but on known vulnerabilities within Veracode's database. The upside is more confidence in suggested fixes; the downside is that it can suggest fixes in fewer scenarios.

Key features:

- “Batch fix” mode: Ability to apply top-ranked fixes across multiple findings or files in a directory in one operation

- Integration into the Veracode product ecosystem: Fix is part of Veracode’s IDE scanning, analysis, and reporting stack.

- CI/CD Integration: Seamlessly integrates with CI/CD pipelines, facilitating continuous testing and delivering prompt feedback

Best for: Enterprises using the Veracode ecosystem that want reliable, verified AI-assisted fixes without exposing code to third-party models.

Pros:

- Integration with Veracode’s scanning & reporting means the fixes will align with existing workflows and scans

- The constrained nature of the model reduces chances of wild or unsafe suggestions outside known patterns.

Cons:

- Limited in the scenarios it can suggest code fixes due to its training on known vulnerabilities.

- Over-reliance risk: developers might trust suggestions too much without careful review, especially in subtle or complex parts of the code.

How to Choose a SAST Tool

AI is a relatively new entrant to the security market, and industry leaders are continuously exploring innovative applications. AI should be viewed as a tool to enhance security systems, rather than as a sole source of truth. It's important to note that AI cannot transform subpar tools into effective ones. To maximize its potential, AI should be integrated with tools that already have a robust foundation and a proven track record.

SAST and DAST Tools: Your Application Security Testing Starter Pack

Application security has never been more complex and more critical. AI has made code generation effortless, but that same acceleration demands a new level of vigilance.

You can’t just use traditional SAST tools or DAST tools. You need to fight AI with AI. And you should continue the fight in the cloud and at runtime.

That’s where Aikido shines.

Beyond SAST and DAST tools, Aikido brings a plethora of AI-driven tools, from vulnerability management to continuous compliance visibility, enabling you to secure your code, cloud, and runtime.

To continue your learning, you might also like:

- Top Code Vulnerability Scanners – Compare broader static analysis tools beyond just AI.

- Top DevSecOps Tools – Discover how SAST fits into a modern DevSecOps workflow.

- Top 7 ASPM Tools – Manage and prioritize findings from your SAST and other scanners.
