Why are you here?

You want to know the real answer to two questions about Docker security:

Is Docker secure for production use?

Yes and no. Docker uses a security model that relies on namespaces and resource isolation, which protects the processes inside a container from certain attacks better than running your applications directly on a cloud VM or bare-metal system. Despite that layer, there are still plenty of ways for attackers to access your container, allowing them to read confidential information, run denial-of-service (DoS) attacks, or even gain root access to the host system.

How can I improve my Docker security (in a not terribly painful way)?

We’ll walk you through the most common and severe Docker vulnerabilities, skipping over the basic recommendations you’ll find all over Google, like using official images and keeping your host up to date. Instead, we’ll lead you directly to new docker options and Dockerfile lines that will make your default Docker container deployment far more secure.

The no-BS Docker security checklist

Make in-container filesystems read-only

What do you gain?

You prevent an attacker from editing the runtime environment of your Docker container, which could allow them to collect useful information about your infrastructure, gather user data, or conduct a DoS or ransomware attack directly.

How do you set it?

You have two options, either at runtime or within your Docker Compose configuration.

At runtime: docker run --read-only your-app:v1.0.1
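Many applications still need somewhere to write temporary files, such as PID files or caches. A common pattern is to pair --read-only with a --tmpfs mount for just those paths. The sketch below wraps that in a small shell function; the name run_read_only, the /tmp path, and the alpine test image are assumptions for illustration.

```shell
# Hypothetical helper: run IMAGE [COMMAND...] with a read-only root
# filesystem plus an in-memory, writable /tmp for scratch files.
run_read_only() {
  image="$1"
  shift
  # --rm removes the throwaway container once the command exits.
  docker run --rm --read-only --tmpfs /tmp "$image" "$@"
}
```

On a Docker host, run_read_only alpine touch /probe should fail with a read-only file system error, while run_read_only alpine touch /tmp/probe should succeed, which is a quick way to confirm the flag is doing its job.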

In your Docker Compose file:

services:
  webapp:
    image: your-app:v1.0.1
    read_only: true
    # ...

Lock privilege escalation

What do you gain?

You keep your Docker container, or an attacker who is mucking about inside said container, from gaining new privileges, even root-level ones, through setuid or setgid binaries. With more permissive access to your container, an attacker could access credentials in the form of passwords or keys to connected parts of your deployment, like a database.

How do you set it?

Once again, at runtime or within your Docker Compose configuration.

At runtime: docker run --security-opt=no-new-privileges your-app:v1.0.1
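To confirm the option took effect, you can read the kernel’s NoNewPrivs flag from /proc/self/status inside a container (the field exists on Linux 4.10 and later). The function name and the alpine image below are illustrative assumptions.

```shell
# Hypothetical helper: start a throwaway container from IMAGE with
# no-new-privileges set and print the kernel's NoNewPrivs flag as seen
# by the process inside it. A value of 1 means the flag is active.
check_no_new_privs() {
  docker run --rm --security-opt=no-new-privileges "$1" \
    grep NoNewPrivs /proc/self/status
}
```

check_no_new_privs alpine should report NoNewPrivs: 1, while the same grep in a container started without the option typically reports 0.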

In your Docker Compose file:

services:
  webapp:
    image: your-app:v1.0.1
    security_opt:
      - no-new-privileges:true
    # ...

Isolate your container-to-container networks

What do you gain?

By default, Docker attaches every container to the same bridge network (docker0) and lets them all communicate, which might allow an attacker to move laterally from one compromised container to another. If you have discrete services A and B in containers Y and Z, and they don’t need to communicate directly, isolating their networks provides the same end-user experience while preventing lateral movement for better Docker security.

How do you set it?

You can specify Docker networks at runtime or within your Docker Compose configuration. However, you first need to create the network:

docker network create your-isolated-network

At runtime, add the --network option:

docker run --network your-isolated-network your-app:v1.0.1
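Isolation comes from giving services that don’t need to talk to each other separate networks, not from putting them all on one. As a sketch, the hypothetical helper below creates a dedicated user-defined bridge network per service (named after the service with a -net suffix, an assumption for illustration) and starts the container on it.

```shell
# Hypothetical helper: run IMAGE as container NAME on its own
# user-defined bridge network, NAME-net. Containers that share no
# network can't reach each other directly.
run_isolated() {
  name="$1"
  image="$2"
  # Create the network, ignoring the error if it already exists.
  docker network create "${name}-net" >/dev/null 2>&1 || true
  docker run -d --name "$name" --network "${name}-net" "$image"
}
```

For services A and B from the example above, you might call run_isolated service-y your-app-a:v1.0.1 and run_isolated service-z your-app-b:v1.0.1 (hypothetical image names). If a service also shouldn’t reach the outside world, docker network create --internal creates a network without external connectivity.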

Or the equivalent option in your Docker Compose file:

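services:
  webapp:
    image: your-app:v1.0.1
    networks:
      - your-isolated-network
    # ...

networks:
  your-isolated-network:
    # Created beforehand with docker network create, so Compose
    # must not try to create it itself.
    external: true

Set a proper non-root user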

What do you gain?

The default user within a container is root, with a uid of 0. By specifying a distinct user, you prevent an attacker from escalating their privileges to another user that can take action without restrictions, like root, which would override any other Docker security measures you’ve worked hard to implement.

How do you set it?

Create your user during the build process, or set it at runtime. At runtime, you can either override the USER you already set at build, or pass a numeric UID (optionally with a GID, as in -u 1000:1000) that doesn’t need to exist in the image at all.

During the build process, in your Dockerfile:

...

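# Create an unprivileged system group and user. groupadd/useradd assume a
# Debian- or RHEL-based image; Alpine uses addgroup/adduser instead.
RUN groupadd -r your-user \
    && useradd -r -g your-user your-user

# Switch to that user for every following instruction and at runtime.
USER your-user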

...

At runtime: docker run -u your-user your-app:v1.0.1
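It’s easy to lose track of which images actually set a USER. As a sketch, the hypothetical require_non_root function below reads the image’s configured user with docker image inspect and refuses images that would run as root. Treating an empty value as root reflects Docker’s default; the exact list of root spellings checked is an assumption.

```shell
# Hypothetical helper: fail if IMAGE is configured to run as root.
# An empty Config.User means no USER was set, so Docker defaults to root.
require_non_root() {
  user="$(docker image inspect --format '{{.Config.User}}' "$1")"
  case "$user" in
    "" | root | root:* | 0 | 0:*)
      echo "refusing: $1 runs as root" >&2
      return 1
      ;;
  esac
  echo "$1 runs as $user"
}
```

In CI, require_non_root your-app:v1.0.1 || exit 1 stops a pipeline before a root-running image ships.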

Drop Linux kernel capabilities

What do you gain?

By default, Docker containers are allowed to use a restricted set of Linux kernel capabilities. You might think the folks at Docker created that restricted set to be completely secure, but many capabilities exist for compatibility and simplicity. For example, default containers can change ownership of any file, change their root directory, manipulate process UIDs and GIDs, and open raw network sockets. By dropping some or all of these capabilities, you minimize the number of attack vectors.
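To see what a container actually gets, you can print the capability bitmasks of the process inside it and decode them on the host with capsh (part of libcap, installed separately). The function name and the alpine image are assumptions for illustration; any extra arguments are passed to docker run as options.

```shell
# Hypothetical helper: print the Cap* lines (inheritable, permitted,
# effective, bounding, ambient) for the process inside a throwaway
# alpine container. Extra arguments become docker run options.
show_caps() {
  docker run --rm "$@" alpine grep Cap /proc/self/status
}
```

Compare the output of show_caps with show_caps --cap-drop ALL --cap-add CHOWN, then decode a mask such as the CapEff value with capsh --decode=<hex value> to see the capability names.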

How do you set it?

You can drop capabilities and set new ones at runtime. For example, you could drop all kernel capabilities and allow your container only the capability to change ownership of existing files.

docker run --cap-drop ALL --cap-add CHOWN your-app:v1.0.1

Or for Docker Compose:

services:
  webapp:
    image: your-app:v1.0.1
    cap_drop:
      - ALL
    cap_add:
      - CHOWN
    # ...

Prevent fork bombs

What do you gain?

Fork bombs are a type of DoS attack in which a process replicates itself endlessly. They degrade performance and exhaust resources, which inevitably raises costs and can ultimately crash your containers or the host system. Once a fork bomb has started, there’s often no practical way to stop it other than restarting the container or the host.
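Before changing anything, it can help to audit which running containers have no PID limit at all. docker inspect exposes the configured value under HostConfig.PidsLimit. The hypothetical require_pids_limit function below treats an empty value, 0, -1, or <nil> as "no limit"; which of these appears for an unset limit may vary by Docker version, so that list is an assumption.

```shell
# Hypothetical helper: fail if CONTAINER has no PID limit configured.
# Unset or unlimited values may be reported as empty, 0, -1, or <nil>
# depending on the Docker version, so all of these count as "none".
require_pids_limit() {
  limit="$(docker inspect --format '{{.HostConfig.PidsLimit}}' "$1")"
  case "$limit" in
    "" | 0 | -1 | "<nil>")
      echo "$1 has no PID limit" >&2
      return 1
      ;;
  esac
  echo "$1 PID limit: $limit"
}
```

To audit everything at once, you could loop over the IDs from docker ps -q and call require_pids_limit on each.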

How do you set it?

At runtime, you can limit the number of processes (PIDs) your container can create.

docker run --pids-limit 99 your-app:v1.0.1

Or with Docker Compose:

services:
  webapp:
    image: your-app:v1.0.1
    deploy:
      resources:
        limits:
          pids: 99

Improve Docker security by monitoring your open source dependencies

What do you gain?

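The applications you’ve containerized for deployment with Docker likely have a wide tree of dependencies. Each of those packages is a potential way in: a single dependency with a known vulnerability, or one that’s been hijacked to ship malware, ends up inside your container image and runs with whatever access your container has.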

How do you set it?

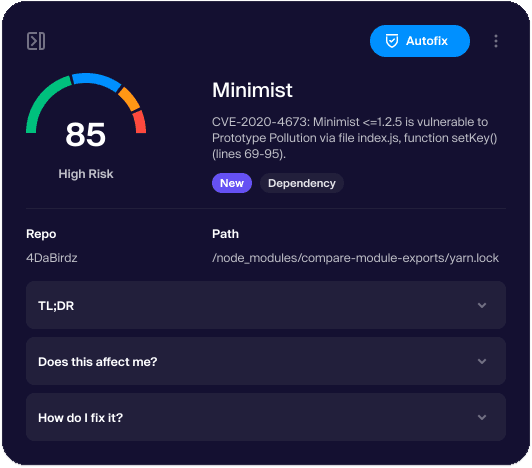

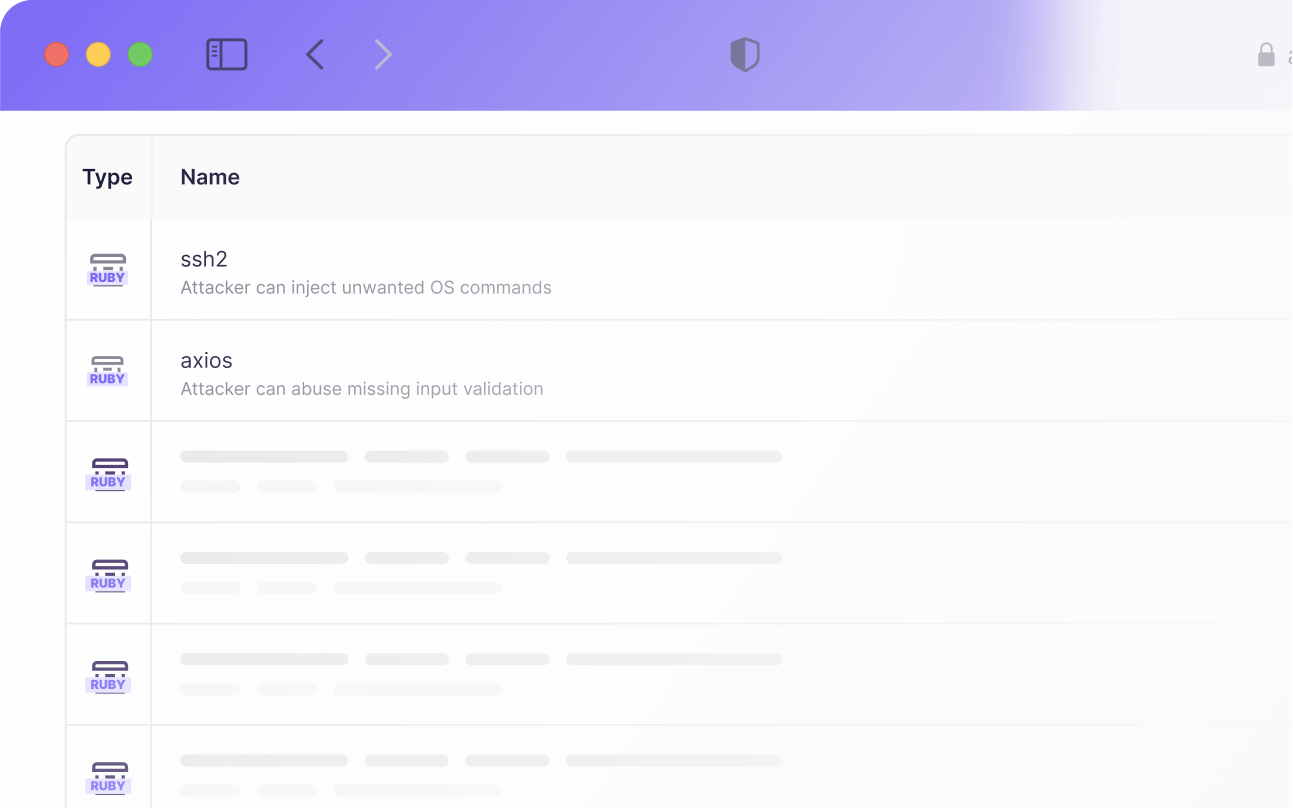

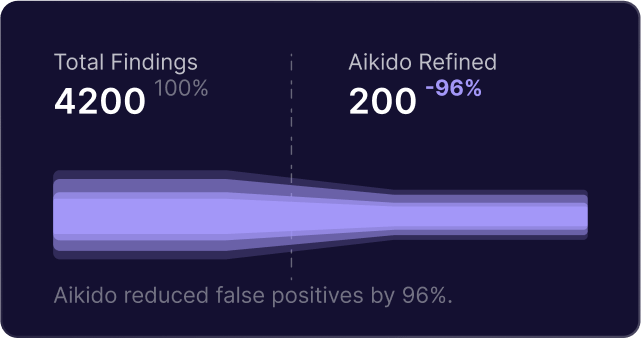

The most “non-BS” way is with Aikido’s open-source dependency scanning. Our continuous monitoring scans projects written in more than a dozen languages based on the presence of lockfiles within your application and delivers an instant overview of vulnerabilities and malware. With automatic triaging that filters out false positives, Aikido gives you remediation advice you can start working with right away… not only after you read a dozen other reference documents and GitHub issues.

At Aikido, we love established open-source projects like Trivy, Syft, and Grype. We also know from experience that using them in isolation isn’t a particularly good developer experience. Under the hood, Aikido enhances these projects with custom rules to bridge gaps and reveal security flaws you wouldn’t be able to find otherwise. Unlike chaining various open-source tools together, Aikido frees you from having to build a scanning script or create a custom job in your CI/CD.
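If you’d like to try one of those open-source scanners on its own first, Trivy can scan a built image and return a non-zero exit code when it finds vulnerabilities at the severities you choose, which is enough to fail a CI job. This sketch assumes Trivy is installed separately; the function name is hypothetical.

```shell
# Hypothetical helper: scan IMAGE with Trivy and exit non-zero if any
# HIGH or CRITICAL vulnerabilities are found, so a CI step fails.
scan_image() {
  trivy image --severity HIGH,CRITICAL --exit-code 1 "$1"
}
```

Usage: scan_image your-app:v1.0.1 || exit 1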

Use only trusted images for Docker security

What do you gain?

Docker Content Trust (DCT) is a system for signing images and verifying the integrity and publisher of the images you pull from registries like Docker Hub. Pulling only images signed by their publisher gives you more assurance that they haven’t been tampered with to introduce vulnerabilities into your deployment.
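Before enforcing DCT everywhere, you can check whether a tag you depend on is signed at all, and by whom, with docker trust inspect. The hypothetical trusted_pull function then pulls a tag with DCT enabled for that single command only, so the pull fails if no valid signature is found. Both function names are assumptions for illustration.

```shell
# Hypothetical helpers for Docker Content Trust (DCT).

# Show the signers and signed tags recorded for IMAGE[:TAG].
show_signers() {
  docker trust inspect --pretty "$1"
}

# Pull IMAGE:TAG with DCT enabled for this one command only.
trusted_pull() {
  DOCKER_CONTENT_TRUST=1 docker pull "$1"
}
```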

How do you set it?

The easiest way is to set the environment variable in your shell, which makes Docker refuse to pull or run unsigned images for the rest of that shell session.

export DOCKER_CONTENT_TRUST=1
docker run ...

Or, you can set the environment variable each time you execute Docker:

DOCKER_CONTENT_TRUST=1 docker run …

Update end-of-life (EOL) runtimes

What do you gain?

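One common recommendation for Docker container security is to pin images and dependencies to a specific version instead of latest. In theory, that prevents you from unknowingly pulling new images, even tampered ones, that introduce new vulnerabilities. The trade-off is that pinned versions never update themselves: eventually the runtime you’ve pinned, like a specific Node.js or Python release, reaches end of life and stops receiving security patches, leaving known vulnerabilities in your containers.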

How do you set it?

Several open-source projects can help you discover upcoming EOL dates and prepare for them. The endoflife.date project (GitHub repository) tracks more than 300 products by aggregating data from multiple sources and making it available via a public API. You have a few options with endoflife.date and similar projects:

- Manually check the project for updates on dependencies your applications rely on and create tickets or issues for required updates.

- Write a script (Bash, Python, etc.) to get the EOL dates of dependencies from the API and run it regularly, like a cron job.

- Incorporate the public API, or that custom script, into your CI platform to fail builds that use a project that’s nearing or has reached EOL.
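The second and third options can be combined into a small script. The sketch below assumes curl and jq are installed, and that the endoflife.date API serves per-release data at https://endoflife.date/api/<product>/<cycle>.json with an eol field holding either a YYYY-MM-DD date or a boolean; check the project’s API documentation before relying on that format. The function names are hypothetical.

```shell
# Sketch of an EOL check against the endoflife.date API (assumed format:
# GET https://endoflife.date/api/<product>/<cycle>.json -> {"eol": ...}).

# Succeed when EOL value $1 is on or before date $2 (both YYYY-MM-DD).
# "true" means EOL without a date; "false", "null" or empty mean no EOL yet.
is_past_eol() {
  eol="$1"
  today="$2"
  case "$eol" in
    true) return 0 ;;
    false | null | "") return 1 ;;
  esac
  # ISO dates sort chronologically as plain strings; the C locale makes
  # sort compare them byte by byte.
  first="$(printf '%s\n%s\n' "$eol" "$today" | LC_ALL=C sort | head -n 1)"
  [ "$first" = "$eol" ]
}

# Fail (non-zero exit) when PRODUCT CYCLE, e.g. "nodejs 18", is past EOL.
check_eol() {
  product="$1"
  cycle="$2"
  eol="$(curl -fsS "https://endoflife.date/api/${product}/${cycle}.json" | jq -r '.eol')"
  if [ -z "$eol" ]; then
    echo "ERROR: could not fetch EOL data for $product $cycle" >&2
    return 2
  fi
  if is_past_eol "$eol" "$(date +%Y-%m-%d)"; then
    echo "EOL: $product $cycle reached end of life ($eol)" >&2
    return 1
  fi
  echo "OK: $product $cycle (EOL: $eol)"
}
```

Running check_eol nodejs 18 || exit 1 in a CI job or cron entry covers both ideas. To also flag runtimes that are nearing EOL, you could compare against a future date instead of today’s in check_eol, for example one produced by GNU date’s -d '+90 days' option (BSD date uses different syntax).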

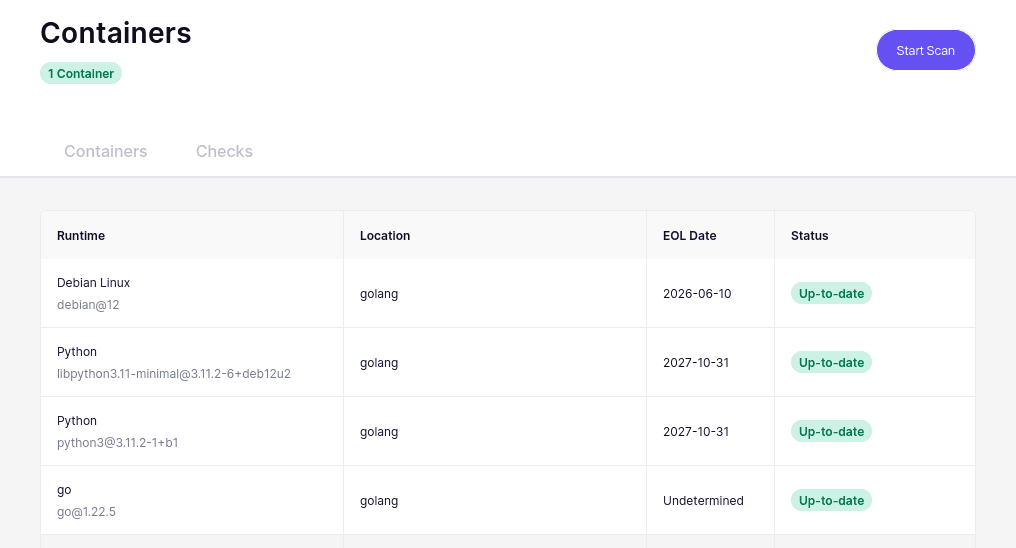

We understand that as a developer, your time is valuable and often limited. This is where Aikido can provide a sense of security: our EOL scanning feature tracks your code and containers, prioritizing runtimes with the most impact and exposure, like Node.js or an Nginx web server. As usual, we not only automate collecting information, but deliver alerts with appropriate severity to inform, not overwhelm, you.

Limit container resource usage

What do you gain?

By default, containers have no resource constraints and can use as much memory or CPU as the host’s kernel scheduler allows. Limiting the resource usage of a specific container can minimize the impact of a DoS attack. Instead of exhausting the host’s memory and taking down your container or the whole host system, the ongoing DoS attack will “only” negatively impact the end-user experience.
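When a container does hit its memory limit, the kernel’s out-of-memory (OOM) killer steps in, and Docker records that in the container’s state. The hypothetical helpers below check that flag and print a one-off snapshot of current usage against the configured limits; the function names are assumptions, while the inspect and stats format fields are Docker’s own.

```shell
# Hypothetical helper: succeed if Docker recorded an out-of-memory (OOM)
# kill for CONTAINER.
was_oom_killed() {
  [ "$(docker inspect --format '{{.State.OOMKilled}}' "$1")" = "true" ]
}

# Print one snapshot (no live stream) of CPU and memory use for CONTAINER;
# MemUsage shows current usage against the memory limit.
show_usage() {
  docker stats --no-stream --format '{{.Name}}: {{.CPUPerc}} CPU, {{.MemUsage}}' "$1"
}
```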

How do you set it?

At runtime, you can use the --memory and --cpus options to set limits for memory and CPU usage, respectively. The memory option takes a number with a unit suffix, such as m for megabytes or g for gigabytes, while the CPU option sets how many CPUs’ worth of processing time the container and its processes can use (fractions like 1.5 are allowed); it does not dedicate specific CPUs to the container.

docker run --memory="1g" --cpus="2" your-app:v1.0.1

This also works with Docker Compose:

services:
  webapp:
    image: your-app:v1.0.1
    deploy:
      resources:
        limits:
          cpus: '2'
          memory: 1G
    # ...

Your final command and Compose options for Docker security

By now you’ve seen quite a few Docker security tips and the relevant CLI options or configuration to go along with them, which means you’re either quite excited to implement them or overwhelmed with how to piece them all together. Below, we’ve rolled up all the recommendations into a single command or configuration template, which will help you start deploying more secure Docker containers right away.

Obviously, you’ll want to change some of the options—like the non-root user name, kernel capabilities, resource limits—based on your application’s needs.

export DOCKER_CONTENT_TRUST=1

# OTHER OPTIONS GO HERE: add them anywhere before the image name.
docker run \
  --read-only \
  --security-opt=no-new-privileges \
  --network your-isolated-network \
  --cap-drop ALL \
  --cap-add CHOWN \
  --pids-limit 99 \
  --memory="1g" --cpus="2" \
  --user=your-user \
  your-app:v1.0.1

You might even want to create a drun function in your host’s shell, which works like an alias you can invoke without having to remember all those details.

drun() {
  # Hardened defaults; "$@" passes through any extra options, the image,
  # and an optional command, e.g. drun -it your-app:v1.0.1
  docker run \
    --read-only \
    --security-opt=no-new-privileges \
    --network your-isolated-network \
    --cap-drop ALL \
    --cap-add CHOWN \
    --pids-limit 99 \
    --memory="1g" --cpus="2" \
    --user=your-user \
    "$@"
}

Then run drun like so, with your options and image name: drun -it your-app:v1.0.1

If you’re a Docker Compose kind of person, you can adapt all the same options into a new baseline Docker Compose template you can work from in the future:

services:
  webapp:
    image: your-app:v1.0.1
    read_only: true
    user: your-user
    security_opt:
      - no-new-privileges:true
    networks:
      - your-isolated-network
    cap_drop:
      - ALL
    cap_add:
      - CHOWN
    deploy:
      resources:
        limits:
          pids: 99
          cpus: '2'
          memory: 1G
    # ... OTHER OPTIONS GO HERE

networks:
  your-isolated-network:
    external: true

Bonus: Run Docker with rootless containers

When you install Docker on any system, its daemon operates with root-level privileges. Even if you enable all the options above, and prevent privilege escalation within a Docker container, the rest of the container runtime on your host system still has root privileges. That inevitably widens your attack surface.

The solution is rootless containers, which an unprivileged user can create and manage. No root privileges involved means far fewer security issues for your host system.

We wish we could help you use rootless containers with a single option or command, but it’s just not that simple. You can find detailed instructions at the Rootless Containers website, including a how-to guide for Docker.

What’s next for your Docker security?

If you’ve learned anything from this experience, it’s that container security is a long-tail operation. There are always more hardening checklists and deep-dive articles to read about locking down your containers in Docker or its often misunderstood cousin, Kubernetes. You can’t realistically aim for faultless container security. Instead, carving out time in your busy development schedule to address security, and then making incremental improvements based on impact and severity, will go a long way over time.

To help you make the most of that continuous process and prioritize fixes that will meaningfully improve your application security, there’s Aikido. We just raised a $17 million Series A for our “no BS” developer security platform, and we’d love to have you join us.

